Science fiction has a curious way of being prophetic. We can only hope that stories like "The Matrix," "The Terminator," "I, Robot," and others missed the mark when they suggested that robots and computers would rebel against us and either use us as batteries, turn us into fertilizer, or crush us under their robot feet.

If you're worried about the rise of the machines, consider the fact that there are things humans do better than machines. There's even one thing a bird can do better. These skills may not save our species, but who knows?

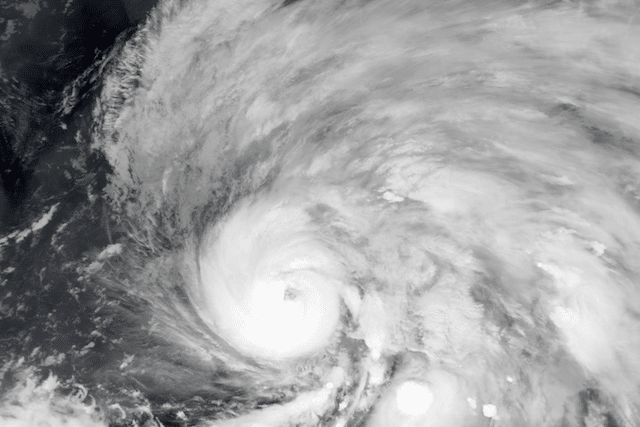

10. Veery birds are better at predicting hurricanes than machines

Hurricane forecasting is a big deal. We now rely on a variety of systems to tell us whether a hurricane is coming, including satellites, radar, and even ships and buoys at sea. With all this technology, we can predict a hurricane about 36 to 48 hours in advance.

When it comes to long-term forecasts, we have nothing better than chance. We can predict that hurricanes will come during hurricane season, but so what? That's like predicting that the sun will rise tomorrow. For more certainty, we can turn away from computers and toward birds. Veeries have an uncanny ability to predict severe hurricane weather, as their breeding seasons show. These birds live in southern Canada and the northern United States and raise one clutch of eggs per breeding season.

In years when the hurricane season turns out to be severe, veeries shorten their breeding season, even if they have not yet nested successfully. They do this several months before hurricane season begins, yet the two are clearly related.

In 2018, an ornithologist predicted a particularly strong hurricane season, while meteorological data pointed to the opposite. Weather scientists insisted it would be a mild year. Using the ACE (Accumulated Cyclone Energy) index, which measures the total energy of a season's cyclones, they forecast a value anywhere from 60, which is quite low, to a maximum of 103, which is below average.

The ornithologist, who had never forecast the weather in his life, suggested anywhere from 70 to as high as 150. The actual figure was 129. He based his prediction on 20 years of observing veeries in the wild.

9. Computers are great players, but they don't work well in teams

The global gaming market is an absolute juggernaut. It's predicted to be worth around $257 billion by 2025. That's 93% of Elon Musk's fortune! That kind of money has inspired a lot of amazing innovation in technology, including graphics and artificial intelligence. And computers aren't just keeping up with human gamers; sometimes they're even ahead of them.

Artificial intelligence has proven itself to be a better gamer than the average human, even though it's a relatively new development. But take this with a grain of salt. In a strictly numbers-driven style of play, the computer can often do a better job than the guy from Idaho who keeps insulting your mother while you play Call of Duty. However, when the game gets cooperative and requires teamwork, the AI starts to show some weaknesses. Namely, AI sucks at teamwork.

Human players generally express frustration when it comes to interacting with AI teammates. One study found that an AI teammate did not improve game performance compared to a pre-programmed computer partner that was designed simply to know the rules of the game and play in a fixed way. The big difference was that human players hated working with the AI. They saw it as unreliable, unpredictable, and untrustworthy: three things you don't want in a gaming partner.

8. Computer translations are usually quite sloppy.

Have you ever come across a word or phrase in another language and then translated it online? And then taken the time to translate it back to English only to find that it was essentially gibberish? That’s because computers are really bad at translation. Translation programs are notoriously bad at picking up on context and other nuances of language, making computer translators rudimentary at best and useless at worst.

Things like slang, cultural context, proper names, and more get lost in machine translation. Consider a word like "set." According to Guinness World Records, it has over 430 possible meanings. A machine needs context clues to figure out which meaning applies in a given sentence, and that is no easy task.

Machines, even very advanced ones, usually translate idioms literally. A single word can change the tone of an entire sentence, and AI translators often miss that. You may get the gist of a piece, but that's not necessarily enough, especially with fiction, where you want the story itself and not just a summary of the basic facts.

7. Picking fruit

Machines can build cars, computers, and all sorts of other machines for us these days, but ironically they struggle with some of the simplest tasks. For example, they're not very good at picking strawberries, or many other fruits and vegetables, for that matter.

The reason robots fail is easy to guess: they are not very good at judging whether they are being too rough. Strawberries and other berries require a light touch. A robot can probably pick nuts until the cows come home, but berries need to be handled with care, and robot harvesters have no way of knowing whether they are squeezing too hard and destroying the crop.

To get around this problem, some harvesting robots are being developed to pick entire plants rather than individual berries. They can do the work of 30 people in the same amount of time. But for now, harvesting robots have to scan fields and work out where to find ripe fruit, and so far they pick only about 50% of it, while humans can pick up to 90%.

6. AI is bad at reading emotions

Facial recognition technology has been in the news for years. People are wary of it because it smacks of a surveillance state and constant monitoring. But people also fear the ability of computers to look at you and read you, essentially determining how you are feeling from one moment to the next. This can be used to exploit people for marketing, advertising, and other money-making purposes. Schools in China have used it to gauge how children were feeling during distance learning, ostensibly to improve the overall learning experience.

The thing is, emotion-detecting computers aren't very good. Despite what the people who sell the technology insist, there's little evidence that it's very effective. Neuroscientists have stated outright that you can't accurately judge a person's emotional state from their facial expressions.

5. Humans are better soldiers than robots

One of the most controversial uses of AI in the modern world is in the military. Should we trust machines to make life-and-death decisions in a war zone? Is it ethical to allow a robot to take a human life? Most people seem to be against the idea, and the US has already assured us that humans will always make the final decision. However, there is some speculation that the ship has sailed, and autonomous killing machines have already been used in the field. So are robots better soldiers than humans? It depends on what you mean by better.

A machine, even an AI, will do what it is told. Without human emotions and ethics, an AI would likely have made a different decision than Stanislav Petrov did in 1983, when his early-warning system reported that the U.S. had launched a nuclear strike on the Soviet Union. Petrov did not alert his government to the attack, as he was required to do, but instead judged it to be a false alarm, and he was right. An AI would likely have done the opposite, and none of us would be here to discuss it. Machines lack morality, and they can be unpredictable in how they process data.

Everyone from Elon Musk to Stephen Hawking has warned that AI could doom us all. That's not what a good soldier does.

4. AI has not yet mastered common sense

Most of us have met someone who is very smart but lacks common sense. You know the type: a math wizard who still acts like an idiot. That's what AI is like. It can be very smart, but it has no common sense.

Common sense is how we describe abductive inference. It’s what allows us to ignore the million silly explanations for things that happen in life and focus on the ones that make the most sense. If you hear a noise upstairs, you might think it’s your spouse or your cat, not an elephant or Gordon Ramsay. The last two options sound silly because you have common sense. AI doesn’t, so it needs to consider them as possibilities.

Modern AI relies on symbolic logic and deep learning. These approaches accomplish a lot, but neither captures common sense, which is why AI cannot come close to replicating real human intelligence.

3. AI writing programs haven't yet surpassed human writing

The future of writing may be captured by machines, but they're not quite there yet. AI can write prose, especially things like journalistic articles, but it hasn't quite mastered the human voice yet.

Programs using GPT-3 (Generative Pre-trained Transformer 3) can produce text that closely mimics the writing of a real person. It's very good at certain kinds of writing, but not at others. For example, if you want it to mimic the way a real person speaks, it's more likely to generate gibberish. It can easily write a fact-based article, but if you want a story that reads like the real Stephen King, it might not feel right. The prose would seem off, or it would need serious editing.

The flaw lies in how the technology works: it is based on prediction and pattern matching. As a result, it generates generic text well. But when you need something specific, like a story in Stephen King's voice, it has no real understanding of what the author is trying to say.

2. Fulfilling warehouse orders

Believe it or not, the common assumption that a robot would do a much better job than a human simply isn't true here. In warehouses like Amazon's, robots are not as good at fulfilling orders as humans are.

In 2019, it was estimated that it would be at least a decade before robots replaced human labor. Robots can pick large items for orders, but small items in bins tend to get damaged, and robots handle them less efficiently than humans do.

Elon Musk admitted that Tesla had gone too far with automation and needed to scale it back, because humans were simply better at staying flexible and dealing with inconsistencies.

1. Captcha

If there's one thing everyone on the internet knows, it's that robots can't look at nine squares and pick the ones with traffic lights. Captcha tests are a website's last defense against robot intrusion, and they use multiple layers of data, including screen size and resolution, IP address, browsers, plugins, keystrokes, and more, to determine that you are you and not a machine.

If you've noticed that these tests are getting harder, and that the garbled-text ones can sometimes fool even you, that's because robots are getting better at them, so the tests have to keep getting harder to beat. In fact, robots are already much better than humans at some tests. But we're still a step ahead of basic programs, and until something better comes along, such as the various game-like tests or inkblot puzzles that have been tried, this should be good enough.
